Review Article

Crowdsourcing: A Systematic Literature Review and Future Research Agenda




Fernando J. Garrigos-Simon*, Yeamduan Narangajavana-Kaosiri

-Departamento de Organización de Empresas, Universitat Politècnica de València. Camino Vera S/N, Valencia, Spain

-Departamento de Comercialización e Investigación de Mercados, Universitat de València. Av. dels Tarongers, s/n, Valencia, Spain

Abstract

Crowdsourcing is highly relevant in the literature because of its impact on the performance of organisations in the new virtual environment. Its importance is crucial in a world where e-commerce, new technologies and artificial intelligence are becoming more and more prevalent, and where organizations require the generation of new data and ideas that increasingly come from outside the organisations. This paper reviews the literature on crowdsourcing, analysing the main trends in the area. To do so, the paper uses different bibliometric techniques, as well as an in-depth literature review. The results indicate that the study of crowdsourcing has moved from conceptual reviews to increasingly practical applications. Management and Computer Science, followed by Geography and Psychology, remain the main areas of research on the topic. Diverse sub-areas linked to "task analysis", "citizen science", "social media", and "machine learning" are preponderant. The paper highlights the managerial side of Crowdsourcing, with innovations related to the evolution of technological applications for the new ubiquitous web, and the personal aspects of the participants. The work helps to observe research trends and the various uses, potentials and key elements of crowdsourcing implementation, which is important for researchers and practitioners.

Introduction

Crowdsourcing has emerged as a vital phenomenon in today's Management Science. The technological development of mobile and smart applications, together with trends towards globalisation and internationalisation, has fostered its growth (Wang et al., 2019). Specifically, nowadays “Social media platforms appeared a key lever to augment the crowdsourcing activities” (Ali et al., 2023, p. 11). In this new framework, Crowdsourcing is crucial for the development of ideas, for problem solving, and for redefining the conception and development of organizations with a wide scope. Given its relevance, Crowdsourcing has become essential for scholars and practitioners (Lenart‑Gansiniec et al., 2022) since Howe (2006) introduced the concept in the academic literature. Its study has experienced significant growth (Ghezzi et al., 2018) and nowadays has great impact. This article carries out a bibliometric analysis of the Crowdsourcing literature to provide an integrative review of crowdsourcing research and to visualize emerging trends, uses and research possibilities in the field.

Crowdsourcing, also known as "massive outsourcing" or "voluntary outsourcing" (Garrigos et al., 2012; Garrigos & Narangajavana, 2015), is defined by Howe (2006, p. 1) as "taking a function once performed by employees and outsourcing it to an undefined (and generally large) network of people in the form of an open call". Estelles-Arolas and González-Ladrón-De-Guevara (2012) defined it as an online distributed participatory process that allows a task to be undertaken for the resolution of a problem: an activity in which an individual or any kind of organization proposes to a group, through an open and flexible call, the voluntary realization of a task. In line with these previous conceptions, and following Garrigos et al. (2017), we define crowdsourcing as the action of taking a specific task or job, whether or not previously performed by an employee of an organization or by a designated agent (such as a contractor, an external worker or a supplier), and subcontracting it, through an “open call”, to a large group of people (inside or outside the organization), a community or the general public via the Internet, for compensation that does not have to be financial.

The notion has several characteristics that explain our definition: a) It is based on the wisdom of the many, or on co-working. b) It focuses on individual entities rather than on companies as a source of innovation and resources. In this sense it breaks down the boundaries of companies, representing an evolution of the outsourcing process, with a new conception of organizational structures towards open structures. c) The crowd includes many members and stakeholders with diverse characteristics (individuals varying in knowledge, heterogeneity and number). d) Participants may be external but also internal to the organizations. e) Campaigns are sometimes limited or targeted to a few specific individuals. f) It includes the participation of the crowd as well as of non-humans. g) The crowd can participate through many resources (they can contribute their work, money, knowledge and/or experience). h) It includes the use of the Internet, new technologies and the virtual world. i) It can be applied to many tasks, of variable complexity and modularity, in the value chain. j) It can include tasks not previously considered by companies or previously performed by employees. k) It can be proposed by an individual, an institution, a nonprofit organization or a company. l) It can be applied to any industry or sector. m) It has appeared in a variety of academic disciplines, with different approaches. n) The activity has mutual benefits: the user receives some compensation, while the crowdsourcer obtains and uses to his or her benefit what the user has contributed. o) Compensation to the crowd for their participation is not only economic (users can also receive social recognition, self-esteem, or the development of individual skills), representing a change in the nature of working relationships. p) In its application it is important to consider the management of ethical issues related to a fair relationship with participants.

Today, the analysis of crowdsourcing is very broad, and it is emerging as an important research topic. It is receiving increasing attention in a wide number of fields, especially within the broad field of management (Ghezzi et al., 2018). Moreover, from a practical point of view, Crowdsourcing improves the competitiveness of firms, as it can provide relatively low-cost solutions (Howe, 2006), and cheaper, better, higher-quality and faster solutions (Blohm et al., 2018). It can also foster positive network externalities, reduce dependency and information asymmetries with suppliers, save time in data collection, increase motivation and incentives, or accelerate the speed of innovation, as it involves voluntary participation and allows access to a large number of public volunteers (Brabham, 2008; Kleemann et al., 2008; Wang et al., 2019). In this regard, Lebraty and Lobre-Lebraty (2013) highlight three main sources of value creation: cost reduction, innovation development and authenticity. Moreover, market orientation and organizational change promoted by crowd participation, and increased exposure to new technologies, can produce significant changes that also affect performance (Garrigos-Simon & Narangajavana, 2015). As indicated by Garrigos et al. (2017), although not always capable of directly improving performance, crowdsourcing can have an indirect influence through the development of distinctive competencies and innovation (Xu et al., 2015) or through changing organizational and customer behaviors.

However, although research on crowdsourcing has grown exponentially in recent years, and in spite of its practical relevance, it is still limited (Garrigos et al., 2017). Furthermore, very little is known about the scope of research on crowdsourcing in the academic literature in general, or about its peculiarities, the state of the art and future trends in the global or management literature.

One mechanism to analyze this situation is the use of bibliometrics, which we complement with visual innovations in this work. Bibliometrics is an interdisciplinary science that quantitatively analyzes knowledge or bibliographic content with mathematical and statistical methods (Broadus, 1987; Liao et al., 2018). The method, in use since 1917 (Osareh, 1996), is widely used nowadays to analyse the development of different fields or areas. Its advantages are its ability to study specific areas of research and draw useful conclusions from them (Liao et al., 2018), and the fact that the information it provides is highly objective, very compact and easy to handle (Diem & Wolter, 2013).

There are several bibliometric studies in areas closely related to Crowdsourcing, or that analyze the Crowdsourcing literature using other techniques. Moreover, there are bibliometric analyses of crowdsourcing in specific fields such as urban sustainability governance (Certoma et al., 2015), public health (Wang et al., 2019) or urban planning (Liao et al., 2019), while Yin et al. (2020) analyze task recommendations in crowdsourcing systems. More recently, Lenart-Gansiniec et al. (2022) analyze crowdsourcing in science and Jiang et al. (2023) in smart cities. However, the only bibliometric documents we have found that focus on the field of crowdsourcing in general are those of Malik et al. (2019) and Cricelli et al. (2022). Nevertheless, the work of Malik et al. (2019) is very brief and limited, as it offers, in only 7 pages, a synthetic presentation of the publications related to crowdsourcing in the Science Citation Index Expanded of the Web of Science. Moreover, Cricelli et al. (2022) combine Crowdsourcing with open innovation, focusing more on the intersection of both topics than on Crowdsourcing as a whole, and use the literature only until 2019.

However, although there are some works that theoretically analyze the concept and its applications (Brabham, 2013; Estelles-Arolas & González-Ladrón-De-Guevara, 2012), and the limited bibliometric study by Malik et al. (2019), we could not find any comprehensive empirical bibliometric analysis of crowdsourcing in general. Nor did we find any research that deals with the analysis and evolution of related topics and the most important issues concerning the field in general.

This work contributes to academia in four main respects. Firstly, it is the first comprehensive bibliometric analysis of crowdsourcing research in general, and can thus provide new knowledge on the subject. Secondly, the analytical framework offers an in-depth understanding of the state and evolution of the crowdsourcing literature, through the analysis of its main references, sources, authors, institutions, countries and, especially, related keywords. The purpose is to observe in depth its main characteristics and networks, and to identify the trends, evolutions and emerging adoptions in the research. Thirdly, a methodological innovation introduced in the article helps to compare periods and to visualize more easily the main evolutions and trends in the area. Fourthly, the results provide ideas and possible applications of crowdsourcing in specific and concrete tasks for professionals, as well as research bases, foundations and orientations for future research.

Method

The search process is based on data provided by the Web of Science (WoS) Core Collection database. Bibliometric studies in academia mainly use the WoS and Scopus databases because of their impact and recognition (López-Meneses et al., 2015); Google Scholar is questioned because it incorporates unreliable references (Delgado López-Cózar et al., 2014). However, this paper focuses only on the WoS because Scopus is less selective (Ghezzi et al., 2018); WoS is more influential, including only the most recognized journals (Merigo & Yang, 2017); and WoS is considered the most popular in the literature, following Garrigos et al. (2018) and Merigo and Yang (2017, p. 75), as "it provides objective results that can be considered sufficiently neutral and representative of the information".

The study retrieves all papers using two keywords, a common practice in the literature (e.g., Blanco-Mesa et al., 2017; Garrigos-Simon et al., 2018): "crowdsourcing" and "crowd sourcing", following Certoma et al. (2015) ("crowd-sourcing" did not add any new material). The population covers every year until December 31, 2022, and the retrieval process was carried out between February and March 2023. The study reduced the initial total of 14102 documents by considering only articles, reviews, letters, and notes, to focus on the main documents (following Cancino et al., 2017c and Garrigos-Simon et al., 2018). Thus, 7231 documents were finally considered (4576 of them in the period 2018-22, and 707 in the areas of “management” and “business”), representing 51.28% of the original data set.

This paper uses the most common research indicators in bibliometric literature: the total number of papers published and total citations to indicate the productivity and incidence (Merigo et al., 2015; Blanco-Mesa et al., 2017); the h-index to represent the quality of a set of papers (Hirsch, 2005); the number of papers above a citation threshold (Cancino et al., 2017) to identify influential articles; the impact factor of journals, to indicate their influence on the dissemination of a research topic (Blanco-Mesa et al., 2017); and the ratio (citations/articles) to measure the impact of each article.
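As an illustration of the indicators listed above, the following minimal sketch computes the h-index, the number of papers above a citation threshold, and the citations/articles ratio from a list of per-paper citation counts. The function names and the `sample` data are hypothetical and are not taken from the study's data set.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each (Hirsch, 2005)."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def papers_above(citations, threshold):
    """Number of papers with at least `threshold` citations."""
    return sum(1 for c in citations if c >= threshold)


def citations_per_paper(citations):
    """Ratio of total citations to number of papers (0.0 for an empty list)."""
    return sum(citations) / len(citations) if citations else 0.0


# Hypothetical citation counts for seven papers (illustration only).
sample = [25, 8, 5, 3, 3, 1, 0]
print(h_index(sample))                         # 3
print(papers_above(sample, 5))                 # 3
print(round(citations_per_paper(sample), 2))   # 6.43
```

Sorting the counts in descending order makes the h-index a single pass: the index is the last rank at which the citation count is still at least the rank.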

This study also uses science mapping with the VOSviewer (visualization of similarities) program, a widely used tool (Blanco-Mesa et al., 2017). The software analyzes the structure of citations from authors, journals, universities or countries. The work studies co-citation (Small, 1973), which occurs when two papers receive a citation from the same paper, applied to references, journals, and authors; co-occurrence of “author keywords” (the most common keywords that appear below the abstract, provided by the authors); and bibliographic coupling of authors (the number of references a group of papers have in common) (Kessler, 1963). These analyses are the most used in the bibliometric literature (Blanco-Mesa et al., 2017; Garrigos-Simon et al., 2018).
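The two relations defined above can be sketched in a few lines. This is only an illustration of the definitions of co-citation and bibliographic coupling at paper level, not the procedure implemented by the mapping software; the paper identifiers and reference keys in `reference_lists` are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Count how often each pair of references is cited together by the same paper."""
    counts = Counter()
    for refs in reference_lists.values():
        # Sort so that each unordered pair always has the same key.
        for pair in combinations(sorted(set(refs)), 2):
            counts[pair] += 1
    return counts


def coupling_strength(reference_lists, paper_a, paper_b):
    """Number of references shared by two citing papers (bibliographic coupling)."""
    return len(set(reference_lists[paper_a]) & set(reference_lists[paper_b]))


# Hypothetical citing papers (P1..P3) and the references each one cites.
reference_lists = {
    "P1": ["Howe2006", "Estelles2012", "Afuah2012"],
    "P2": ["Howe2006", "Estelles2012"],
    "P3": ["Howe2006", "Raykar2010"],
}

cocit = cocitation_counts(reference_lists)
print(cocit[("Estelles2012", "Howe2006")])             # 2: co-cited by P1 and P2
print(coupling_strength(reference_lists, "P1", "P2"))  # 2 shared references
```

Co-citation links the cited documents (two references are related when later papers cite both), whereas bibliographic coupling links the citing documents (two papers are related when their reference lists overlap).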

Our research also introduces pioneering visual innovations, through the use of colors, to observe the comparative evolution of the main keywords in different periods. The aim is to better visualize the networks and evolutions of the main themes by incorporating into academia visual innovations made in technological and professional areas, such as the developments in "business intelligence".

Results

The results focus on five analyses. The first presents the current state, situation and evolution of crowdsourcing in the literature, also studying the structure of the citations of the different documents. The second reviews the main papers cited and co-cited in the field of crowdsourcing. The third analyses the main sources through citation and co-citation analysis. The fourth examines in depth the co-occurrence of “author keywords”, analyzing its evolution in various phases. The fifth focuses on the main authors through co-citation and bibliographic coupling.

Status and evolution of crowdsourcing in literature

The first two articles about crowdsourcing were published in the WoS in 2008: Albors et al. (2008) and Ågerfalk and Fitzgerald (2008) (in that year, three proceedings papers, one editorial material and one meeting abstract on crowdsourcing were also published for the first time). Albors et al. (2008) detail the crowdsourcing cases of Innocentive and Procter & Gamble. Ågerfalk and Fitzgerald (2008), on the other hand, analyze opensourcing and its use.

Immediately afterwards, the number of papers grew explosively in number and relevance, showing the interest of academics in the topic (Ghezzi et al., 2018). In 2009, the number of papers (articles, reviews, letters) was 14; it surpassed 100 papers per year (specifically 147) by 2012, only four years after the first articles, and accelerated to 760 in 2017, less than a decade after the first appearance. In the last five years the increase continued, reaching 1026 papers published in 2021 alone. However, despite being pioneers, the Business-Management area grew less (4 published papers in 2009, but representing only around 10% of total publications since 2010, with 707 publications in the whole period 2008-2022). Figure 1 illustrates the annual trends.

Figure 1.

The annual Web of Science (WoS) publications in crowdsourcing

The dark blue line shows the number of publications per year in WoS crowdsourcing research. The red line shows the number of publications per year in Crowdsourcing in the areas of Business and Management. WoS data.

The citations received highlight its relevance. Table 1 shows the general structure of citations in Crowdsourcing. According to the WoS, 28 papers received 500 or more citations (0.39%), while 41.36% of the papers have 10 or more citations and 58.64% have fewer than 10. The h-index of all articles related to Crowdsourcing, which allows a holistic analysis of a field (Blanco-Mesa et al., 2017), is 155. Finally, the papers in the field have more than 183,900 citations in total, with an average of 22.79 citations per article.

Table 1.

General structure of citations in Crowdsourcing

| Number of citations | Number of articles | Accumulated number of articles | % Articles | % Accumulated articles |
|---|---|---|---|---|
| ≥500 | 28 | 28 | 0.39 | 0.39 |
| ≥250 | 40 | 68 | 0.55 | 0.94 |
| ≥100 | 186 | 254 | 2.57 | 3.51 |
| ≥50 | 430 | 684 | 5.95 | 9.46 |
| ≥25 | 779 | 1463 | 10.77 | 20.23 |
| ≥10 | 1528 | 2991 | 21.13 | 41.36 |
| <10 | 4240 | 7231 | 58.64 | 100.00 |
| Total | 7231 | | | |

Source: Own elaboration based on WoS 2023
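The accumulated and percentage columns of Table 1 follow from the number of articles in each citation band. A minimal sketch of that arithmetic, using the band counts reported in the table (the function name `citation_structure` is an assumption introduced for illustration):

```python
# Number of articles per citation band, as reported in Table 1.
band_counts = [
    ("≥500", 28),
    ("≥250", 40),
    ("≥100", 186),
    ("≥50", 430),
    ("≥25", 779),
    ("≥10", 1528),
    ("<10", 4240),
]


def citation_structure(bands):
    """Return (band, articles, accumulated, % articles, % accumulated) rows."""
    total = sum(n for _, n in bands)
    rows = []
    accumulated = 0
    for label, n in bands:
        accumulated += n
        rows.append((
            label,
            n,
            accumulated,
            round(100 * n / total, 2),
            round(100 * accumulated / total, 2),
        ))
    return rows


for row in citation_structure(band_counts):
    print(row)
```

Under this reading, the accumulated share for the ≥10 band (2991 of 7231 articles) is the share of papers with 10 or more citations.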

Analysis of citation and co-citation of papers

Most cited papers in crowdsourcing

Table 2 shows the most frequently cited articles about crowdsourcing. The number of citations is the main factor reflecting the quality of an article (Liao et al., 2018), and also shows its influence and popularity (Blanco-Mesa et al., 2017).

Table 2.

Top 20 papers with the most citations in Crowdsourcing

| R | Journal | TC | Article | Authors | Year | CY |
|---|---|---|---|---|---|---|
| 1 | JEV | 3149 | Biological properties of extracellular vesicles and their physiological functions | Yanez-Mo et al. | 2015 | 393.63 |
| 2 | BRM | 2102 | Conducting behavioral research on Amazon's Mechanical Turk | Mason & Suri | 2012 | 191.09 |
| 3 | IJCV | 1669 | Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations | Krishna et al. | 2017 | 278.17 |
| 4 | SW | 1288 | DBpedia - A large-scale, multilingual knowledge base extracted from Wikipedia | Lehmann et al. | 2015 | 161.00 |
| 5 | JESP | 1257 | Beyond the Turk: Alternative platforms for crowdsourcing behavioral research | Peer et al. | 2017 | 209.50 |
| 6 | BJN | 1204 | Nanotechnology in the real world: Redeveloping the nanomaterial consumer products inventory | Vance et al. | 2015 | 150.50 |
| 7 | CHB | 1183 | Separate but equal? A comparison of participants and data gathered via Amazon's MTurk, social media, and face-to-face behavioral testing | Casler, Bickel, & Hackett | 2013 | 118.30 |
| 8 | BRM | 1149 | TurkPrime.com: A versatile crowdsourcing data acquisition platform for the behavioral sciences | Litman, Robinson, & Abberbock | 2017 | 191.50 |
| 9 | AIM | 1140 | Prevalence of Asymptomatic SARS-CoV-2 Infection: A Narrative Review | Oran & Topol | 2020 | 380.00 |
| 10 | AREES | 1133 | Citizen Science as an Ecological Research Tool: Challenges and Benefits | Dickinson, Zuckerberg, & Bonter | 2010 | 87.15 |
| 11 | BRM | 1029 | Reputation as a sufficient condition for data quality on Amazon Mechanical Turk | Peer, Vosgerau, & Acquisti | 2014 | 114.33 |
| 12 | CI | 990 | Crowdsourcing a word-emotion association lexicon | Mohammad & Turney | 2013 | 99.00 |
| 13 | PO | 978 | Evaluating Amazon's Mechanical Turk as a Tool for Experimental Behavioral Research | Crump, McDonnell, & Gureckis | 2013 | 97.80 |
| 14 | BRM | 931 | Norms of valence, arousal, and dominance for 13,915 English lemmas | Warriner, Kuperman, & Brysbaert | 2013 | 93.10 |
| 15 | JIS | 903 | Towards an integrated crowdsourcing definition | Estelles-Arolas & Gonzalez-Ladron-de-Guevara | 2012 | 82.09 |
| 16 | BRM | 836 | Concreteness ratings for 40 thousand generally known English word lemmas | Brysbaert, Warriner, & Kuperman | 2014 | 92.89 |
| 17 | IEEEJSAC | 796 | Adaptive Federated Learning in Resource Constrained Edge Computing Systems | Wang et al. | 2019 | 199.00 |
| 18 | JMLR | 758 | Learning From Crowds | Raykar et al. | 2010 | 58.31 |
| 19 | CACM | 702 | Crowdsourcing Systems on the World-Wide Web | Doan, Ramakrishnan, & Halevy | 2011 | 58.50 |
| 20 | ARCP | 700 | Conducting Clinical Research Using Crowdsourced Convenience Samples | | | 100.00 |

Source: Own elaboration based on WoS 2023. R: Ranking; TC: Total Citations; CY: Citations per year. SC: Science; JEV: Journal of Extracellular Vesicles; BRM: Behavior Research Methods; IJCV: International Journal of Computer Vision; SW: Semantic Web; JESP: Journal of Experimental Social Psychology; BJN: Beilstein Journal of Nanotechnology; CHB: Computers in Human Behavior; AIM: Annals of Internal Medicine; AREES: Annual Review of Ecology Evolution and Systematics; CI: Computational Intelligence; PO: Plos One; JIS: Journal of Information Science; IEEEJSAC: IEEE Journal on Selected Areas in Communications; JMLR: Journal of Machine Learning Research; CACM: Communications of The ACM; ARCP: Annual Review of Clinical Psychology.
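The CY values in Table 2 appear consistent with dividing total citations by the number of years between publication and 2023, the year in which the data were retrieved. This is an inference from the table values rather than a formula stated in the text, so the sketch below should be read under that assumption; the function name and the `retrieval_year` parameter are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP


def citations_per_year(total_citations, publication_year, retrieval_year=2023):
    """Citations per year, assuming CY = TC / (retrieval year - publication year)."""
    years = retrieval_year - publication_year
    if years <= 0:
        raise ValueError("publication year must precede the retrieval year")
    ratio = Decimal(total_citations) / Decimal(years)
    # Round half up to two decimals, as in the CY column (3149 / 8 gives 393.63).
    return ratio.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(citations_per_year(3149, 2015))  # 393.63 (Yanez-Mo et al.)
print(citations_per_year(2102, 2012))  # 191.09 (Mason & Suri)
print(citations_per_year(1669, 2017))  # 278.17 (Krishna et al.)
print(citations_per_year(1140, 2020))  # 380.00 (Oran & Topol)
```

`Decimal` with half-up rounding is used because binary floating-point rounding of an exact tie such as 393.625 would give 393.62 instead of the tabulated 393.63.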

The most cited article in our sample is Yanez-Mó et al. (2015) (3149 citations), being also the first in citations per year (393.63). This paper, in the field of Biology, analyzes the biological properties of the Extracellular Vesicles and their physiological functions.

Mason and Suri (2012), with 2102 citations in the WoS, ranks second in number of citations, and sixth in citations per year (191.09). This article, framed in the literature of behavioral research, but with a kind of management perspective, analyzes the case of Amazon's Mechanical Turk.

Also worth mentioning is Krishna et al. (2017). This article, published in the “International Journal of Computer Vision”, ranks third both in number of citations (1669) and in citations per year (278.17). The paper focuses on the use of crowdsourced dense image annotations for cognitive tasks, and on the use of this dataset to enable the modeling of such relationships.

Also noteworthy is Oran and Topol (2020), published in “Annals of Internal Medicine”. Although it is ninth in number of citations (1140), it is second in citations per year (380.00). This document explains how tactics for public health surveillance, such as crowdsourcing digital data, might be helpful to manage pandemics.

References co-citation analysis

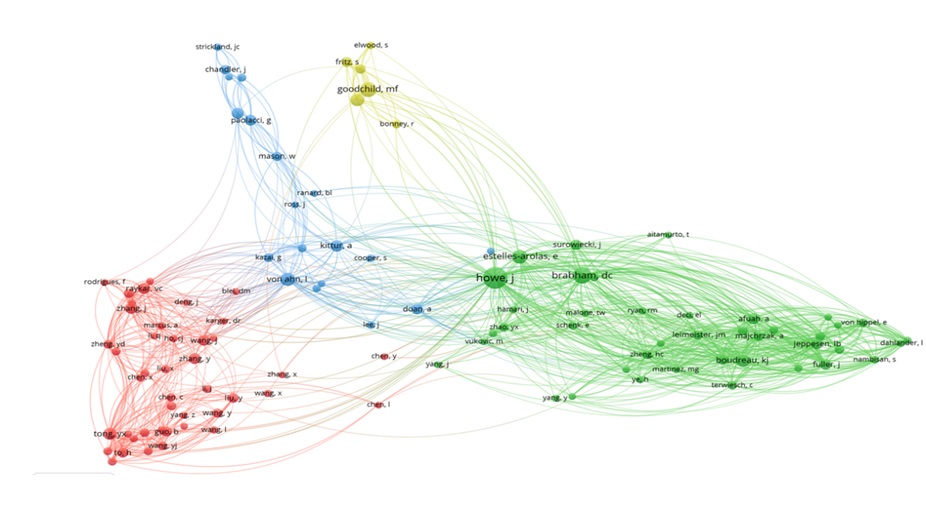

The analysis of co-citations (the network of documents co-cited by our sample of 7231 documents) helps to visualize, examine and analyze the relationships, structure and evolutionary path of the Crowdsourcing field (the nodes illustrate the connections between the co-cited papers, visualizing the main research themes).

Figure 2 shows that Howe (2006), Estelles-Arolas and González-Ladrón-De-Guevara (2012) and Afuah and Tucci (2012) lead the list by total link strength. Howe (2006) was cited by 804 of the 7231 documents related to Crowdsourcing (the 4 entries for the same work by Howe shown by the programme were grouped for a homogeneous comparison). Jeff Howe's work is the first document mentioning, conceiving and defining “crowdsourcing”, a fact that justifies its leadership in this ranking. Although Howe (2006) is the work most cited by our sample, it does not belong to this sample of 7231 documents, because it was published in a magazine not included in the WoS. The second most co-cited paper is Estelles-Arolas and González-Ladrón-De-Guevara (2012). It does belong to our sample of 7231 documents, and receives 397 citations from this sample (and 905 citations from the entire WoS). This paper extensively reviews the concept and definitions of Crowdsourcing in the literature, and proposes and validates an integrated crowdsourcing definition. The ranking is completed by Afuah and Tucci (2012), the fifth by number of citations (226 citations). This paper also focuses on crowdsourcing analysis from a managerial perspective.

These three papers lead the main cluster, in green (Figure 2), which has 37 items, out of the four clusters shown. The cluster also includes Howe (2008) (the fourth most cited) and several references among the 20 most important, such as Doan et al. (2011), Bayus (2013), Poetz and Schreier (2012), Jeppesen and Lakhani (2010), Brabham (2010), and Leimeister et al. (2009). This group focuses on the theoretical analysis of the concept of Crowdsourcing and its definition, mainly from a management perspective. The second main cluster, in red and also with 37 items, is led by Raykar et al. (2010), the ninth most cited paper with 184 citations. Most of these documents take a computational or technological view of crowdsourcing. Buhrmester et al. (2011), the third most co-cited with 263 citations, leads the third cluster. This group, in blue and with 15 items, includes Paolacci et al. (2010) and Mason and Suri (2012), also in the top ranking of citations. These works mainly analyze Amazon Mechanical Turk: as a source of cheap and high-quality data from a psychological perspective in the first case; as a platform to conduct experiments from a decision-making perspective in the second case; or as a mechanism to carry out behavioral research in Mason and Suri's (2012) paper. This cluster has a behavioural-psychological nature. Finally, diverse documents by Goodchild (especially Goodchild (2007), ranked seventh with 220 citations) lead the yellow cluster, which includes 12 items. This group has an environmental-geographical-spatial perspective. For instance, Goodchild (2007) analyzes how various websites empower citizen volunteers to generate information and create applications that are then disseminated; furthermore, it compares this situation with citizen science.

Figure 2.

Co-citation of cited references on Crowdsourcing (until 2022): the 101 references, of the 212674 cited references, that meet the threshold of a minimum of 60 citations of a cited reference

Analysis of citation and co-citation of sources

Leading crowdsourcing areas and journals

The 7231 documents about Crowdsourcing were published in 2698 journals, in 228 areas. The main categories of publications were Computer Science Information Systems (1624 articles, representing 22.46% of total publications), Engineering Electrical Electronic (13.54%) and Telecommunications (10.77%). However, the areas of Management (503 articles, 6.96%) and Business (396 articles, 5.48%) occupy the fifth and seventh positions, respectively.

Of the 2698 journals, only 136 published more than 10 papers related to Crowdsourcing. 770 documents (10.65% of the papers) were published in the top 10 journals (Table 3). The three main journals were IEEE Access, with 2.47% of the total publications, IEEE Internet of Things Journal (1.30%) and Sensors (1.15%). The h-index for Crowdsourcing was led by IEEE Internet of Things Journal (30), IEEE Access (28), and Plos One (25).

Table 3.

The top 22 journals with Crowdsourcing publications

| R | Journal | APC | H-C | TAP | TCC | ACC | PCC | %APC | IF | ≥200 | ≥100 | ≥50 | ≥20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | IEEEA | 179 | 28 | 65271 | 2949 | 2736 | 16.47 | 0.27 | 3.90 | | 1 | 3 | 28 |
| 2 | IEEEITJ | 94 | 30 | 5977 | 2536 | 2203 | 26.98 | 1.57 | 10.60 | | 4 | 16 | 36 |
| 3 | S | 83 | 20 | 40006 | 1214 | 1126 | 14.63 | 0.21 | 3.90 | | | 7 | 20 |
| 4 | PO | 81 | 25 | 263496 | 2656 | 2549 | 32.79 | 0.03 | 3.70 | 1 | 4 | 12 | 30 |
| 5 | IIJGI | 65 | 17 | 4178 | 956 | 841 | 14.71 | 1.56 | 3.40 | 1 | 1 | 1 | 12 |
| 6 | S | 59 | 9 | 59019 | 359 | 337 | 6.08 | 0.10 | 3.90 | | | | 4 |
| 7 | IEEETKD | 58 | 21 | 3020 | 1353 | 1021 | 23.33 | 1.92 | 8.90 | | 2 | 7 | 22 |
| 8 | JMIR | 58 | 20 | 6352 | 1279 | 1117 | 22.05 | 0.91 | 7.40 | | 2 | 5 | 20 |
| 9 | IEEETMC | 57 | 21 | 2880 | 1582 | 1368 | 27.75 | 1.98 | 7.90 | 1 | 2 | 9 | 21 |
| 10 | ESA | 36 | 14 | 15678 | 568 | 537 | 15.78 | 0.23 | 8.50 | | | 3 | 11 |
| 11 | PVLDBE | 33 | 15 | 2033 | 829 | 657 | 25.12 | 1.62 | 2.50 | 1 | 2 | 4 | 1 |
| 12 | SR | 32 | 13 | 166998 | 440 | 428 | 13.75 | 0.02 | 4.60 | | | 1 | 8 |
| 13 | IEEETITS | 31 | 18 | 5976 | 1025 | 953 | 33.06 | 0.52 | 8.50 | | 1 | 8 | 8 |
| 14 | CHB | 29 | 17 | 6336 | 2279 | 2151 | 78.59 | 0.46 | 9.90 | 1 | 2 | 10 | 7 |
| 15 | FGCS | 29 | 12 | 4762 | 483 | 463 | 16.66 | 0.61 | 7.50 | | | 3 | 8 |
| 16 | CN | 28 | 11 | 4466 | 594 | 517 | 21.21 | 0.63 | 5.60 | | 1 | 4 | 7 |
| 17 | IEEETCSS | 28 | 9 | 985 | 386 | 345 | 13.79 | 2.84 | 5.00 | | | 2 | 5 |
| 18 | RS | 28 | 15 | 25140 | 913 | 828 | 32.61 | 0.11 | 5.00 | 1 | 3 | 4 | 13 |
| 19 | WCMC | 28 | 8 | 6301 | 179 | 171 | 6.39 | 0.44 | 2.15 | | | | 3 |
| 20 | BRM | 27 | 18 | 2299 | 8531 | 7281 | 315.96 | 1.17 | 5.40 | 8 | 9 | 11 | 18 |
| 21 | FP | 27 | 8 | 31788 | 361 | 358 | 13.37 | 0.08 | 3.80 | | 1 | 3 | 3 |
| 22 | IEEETM | 27 | 14 | 3018 | 681 | 623 | 25.22 | 0.89 | 7.30 | | 2 | 3 | 10 |

Source: Own elaboration based on WoS 2023. R: Ranking; APC: Articles published in Crowdsourcing (C.); H-C: H-index in the area of Crowdsourcing; TAP: Total articles published (2008-2022); TCC: Total citations in C.; ACC: Articles in which it is cited in C.; PCC: Average citations per article in C.; %APC: Percentage of articles published in C. (APC/TAP); IF: Impact Factor; ≥200, ≥100, ≥50 and ≥20: articles with at least 200, 100, 50 and 20 citations. IEEEA: IEEE Access; IEEEITJ: IEEE Internet of Things Journal; S (rank 3): Sensors; PO: Plos One; IIJGI: ISPRS International Journal of Geo Information; S (rank 6): Sustainability; IEEETKD: IEEE Transactions on Knowledge and Data Engineering; JMIR: Journal of Medical Internet Research; IEEETMC: IEEE Transactions on Mobile Computing; ESA: Expert Systems with Applications; PVLDBE: Proceedings of the VLDB Endowment; SR: Scientific Reports; IEEETITS: IEEE Transactions on Intelligent Transportation Systems; CHB: Computers in Human Behavior; FGCS: Future Generation Computer Systems-The International Journal of Escience; CN: Computer Networks; IEEETCSS: IEEE Transactions on Computational Social Systems; RS: Remote Sensing; WCMC: Wireless Communications Mobile Computing; BRM: Behavior Research Methods; FP: Frontiers In Psychology; IEEETM: IEEE Transactions on Multimedia.
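Two of the Table 3 columns are simple ratios of other columns: %APC is defined in the note as APC/TAP, and the PCC values appear consistent with TCC/APC. The sketch below reproduces both for two rows of the table; the function name `journal_ratios` is an assumption introduced for illustration.

```python
def journal_ratios(apc, tap, tcc):
    """Return (PCC, %APC) for one journal.

    apc: articles published on crowdsourcing
    tap: total articles published by the journal (2008-2022)
    tcc: total citations received by its crowdsourcing articles
    """
    pcc = round(tcc / apc, 2)            # average citations per crowdsourcing article
    pct_apc = round(100 * apc / tap, 2)  # share of the journal devoted to the topic
    return pcc, pct_apc


# Values taken from the IEEE Access and Behavior Research Methods rows of Table 3.
print(journal_ratios(apc=179, tap=65271, tcc=2949))  # (16.47, 0.27)
print(journal_ratios(apc=27, tap=2299, tcc=8531))    # (315.96, 1.17)
```

The two ratios answer different questions: PCC rewards journals whose few crowdsourcing papers are heavily cited, while %APC shows how central the topic is to the journal's overall output.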

Among the 22 journals with the most publications, the source with the highest percentage of its 2008-2022 articles devoted to Crowdsourcing was ACM Transactions on Intelligent Systems and Technology, with almost 3% of its publications dedicated to our field, followed by IEEE Transactions on Computational Social Systems (2.84%) and IEEE Transactions on Services Computing (2.23%). In terms of citations per article published about Crowdsourcing, the ranking among these top 22 journals was led by Behavior Research Methods (315.96 citations on average), Computers in Human Behavior (78.59) and Plos One (32.79).
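As an illustration of how the derived columns of the table relate to the raw counts, the following minimal Python sketch recomputes PCC (TCC/APC) and %APC (APC/TAP) for three rows whose figures appear in the table. The H index helper follows the standard definition (the largest h such that h articles have at least h citations each); the citation list passed to it is hypothetical, because per-article citations are not reported in this paper. The class and function names are illustrative assumptions, not part of the original analysis.

```python
from dataclasses import dataclass


@dataclass
class JournalRow:
    name: str
    apc: int  # articles published on crowdsourcing (APC)
    tap: int  # total articles published, 2008-2022 (TAP)
    tcc: int  # total citations received in crowdsourcing (TCC)

    @property
    def pcc(self) -> float:
        """Average citations per crowdsourcing article: TCC / APC."""
        return self.tcc / self.apc

    @property
    def pct_apc(self) -> float:
        """Share of the journal's output on crowdsourcing, in percent."""
        return 100 * self.apc / self.tap


def h_index(citations):
    """Largest h such that h articles have at least h citations each."""
    h = 0
    for rank, cites in enumerate(sorted(citations, reverse=True), start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


# Raw counts copied from the table rows for CHB, BRM and IEEETCSS.
rows = [
    JournalRow("CHB", apc=29, tap=6336, tcc=2279),
    JournalRow("BRM", apc=27, tap=2299, tcc=8531),
    JournalRow("IEEETCSS", apc=28, tap=985, tcc=386),
]

for r in rows:
    print(f"{r.name}: PCC={r.pcc:.2f}, %APC={r.pct_apc:.2f}")

# Hypothetical per-article citation counts, for illustration only.
example_h = h_index([10, 8, 5, 4, 3])
```

Recomputing the two ratios in this way is a quick consistency check on the table: the rounded values agree with the PCC and %APC columns reported for these three journals.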

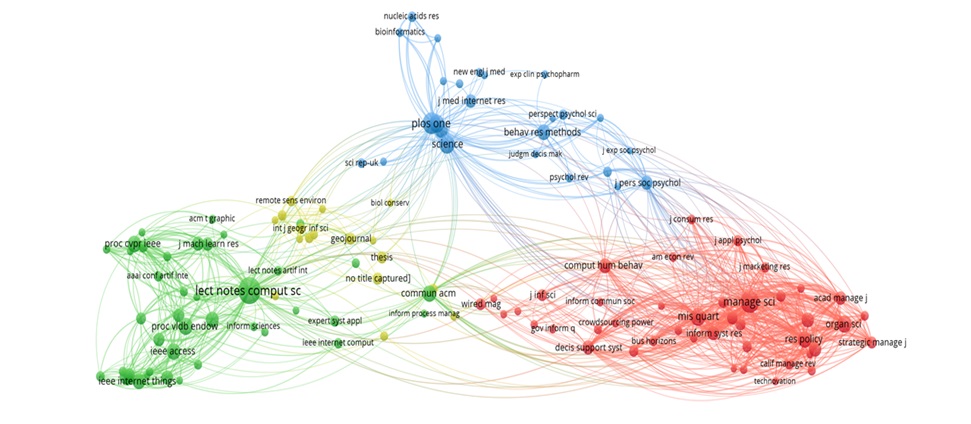

Journal co-citation analysis

This analysis captures how often two journals are cited together by a third document (Garrigos et al., 2018). The results (Figure 3) show four main clusters. The first one, in red and with 43 items, is led by Management Science, the second most cited journal (1897 citations) but first in link strength (55838); MIS Quarterly, the fifth most cited (1259) but second in link strength (43995); and Organization Science (1141 citations, and fourth in link strength with 41771). This group has a strong management orientation.

Figure 3.

Journal co-citation network on C.: the 127 main journals, of the 76291 sources cited by the 7231 documents regarding Crowdsourcing, that meet the threshold of a minimum of 250 citations of a cited source

The second group, in green and with 42 items, is led by Lecture Notes in Computer Science, the most cited journal (2743) and third in link strength (42641). It also contains Communications of the ACM (1067 citations, 18155 link strength), Proc VLDB Endowment (1181 c., 16587 l.s.) and multiple sources from the IEEE group, such as IEEE Access (1049 c., 19027 l.s.) or Proc CVPR IEEE (1044 c., 25679 l.s.). This cluster is predominantly oriented to computer science and engineering. The third cluster, in blue and with 25 items, is led by Plos One, the third most cited (1865) but with only 26189 in link strength, Science (1332 c., 23227 l.s.), Nature (1217 c., 20100 l.s.), Behavior Research Methods (1000 c., 11150 l.s.) and Journal of Personality and Social Psychology. Most of these sources relate to the classical sciences, with psychology and medicine predominating. The last cluster, in yellow and with 17 items, is composed of sources related to geography and the environment, which occupy secondary positions in the citation and link strength rankings.
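The notions of co-citation and link strength used in this analysis can be made concrete with a small sketch. The Python code below is a simplified illustration under stated assumptions, not the procedure used to produce Figure 3: it counts a journal pair once for every citing document whose reference list contains both journals, and defines a journal's total link strength as the sum of its pair counts. Bibliometric mapping software may apply a different counting scheme (for example, counting every pair of cited references), and the toy reference lists are invented for the example.

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Count how often each pair of journals is cited by the same document.

    Each element of reference_lists holds the journals cited by one document.
    A pair is counted once per citing document (simplifying assumption).
    Pairs are stored as alphabetically sorted tuples.
    """
    pair_counts = Counter()
    for cited_journals in reference_lists:
        for a, b in combinations(sorted(set(cited_journals)), 2):
            pair_counts[(a, b)] += 1
    return pair_counts


def total_link_strength(pair_counts):
    """Sum, for every journal, the co-citation counts of all its pairs."""
    strength = Counter()
    for (a, b), n in pair_counts.items():
        strength[a] += n
        strength[b] += n
    return strength


# Toy data: journals cited by three hypothetical citing documents.
docs = [
    ["Management Science", "MIS Quarterly", "Organization Science"],
    ["Management Science", "MIS Quarterly"],
    ["Plos One", "Science", "Management Science"],
]

pairs = cocitation_counts(docs)
strength = total_link_strength(pairs)
```

In this toy example Management Science and MIS Quarterly are co-cited twice, and Management Science accumulates the highest total link strength because it appears alongside every other journal. The same mechanism explains how a journal can rank second in citations but first in link strength.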

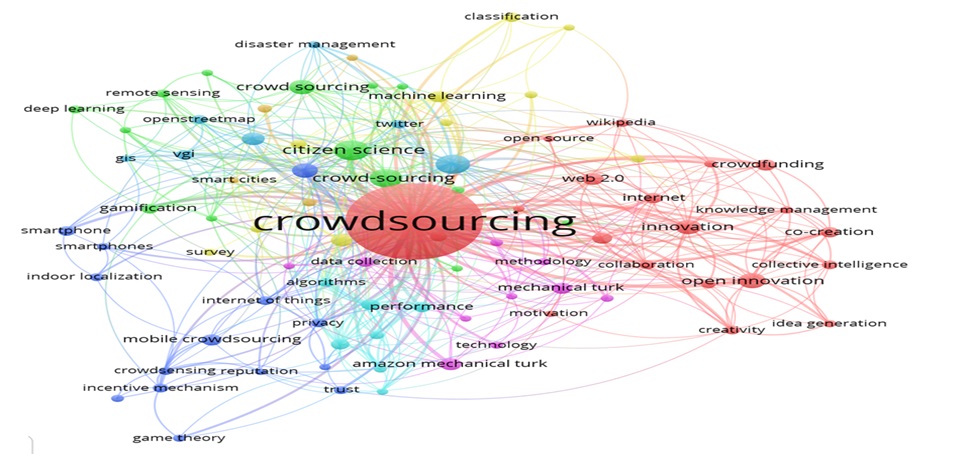

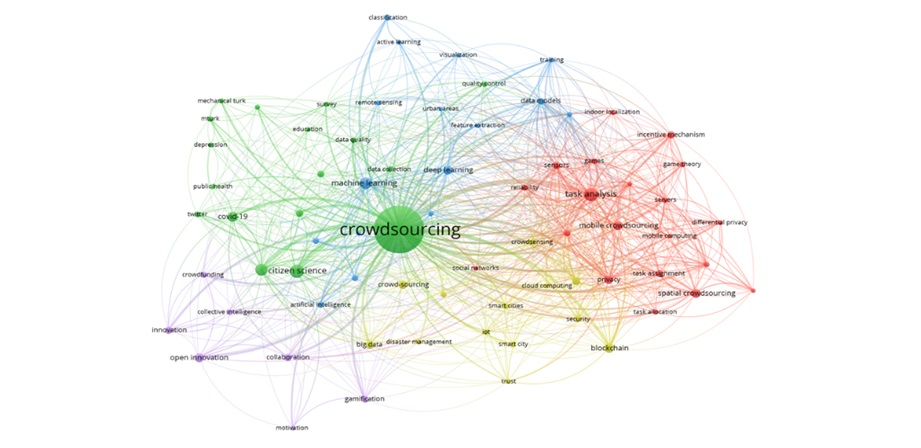

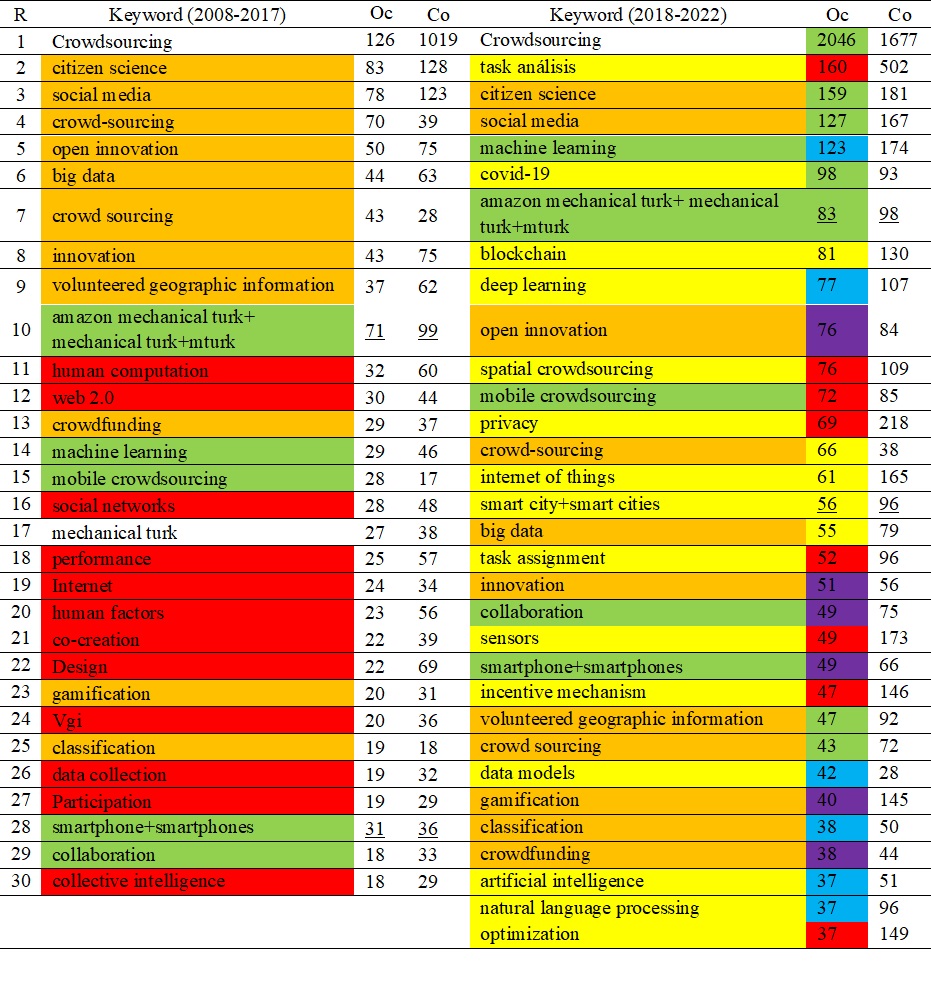

Analysis of “author keywords”

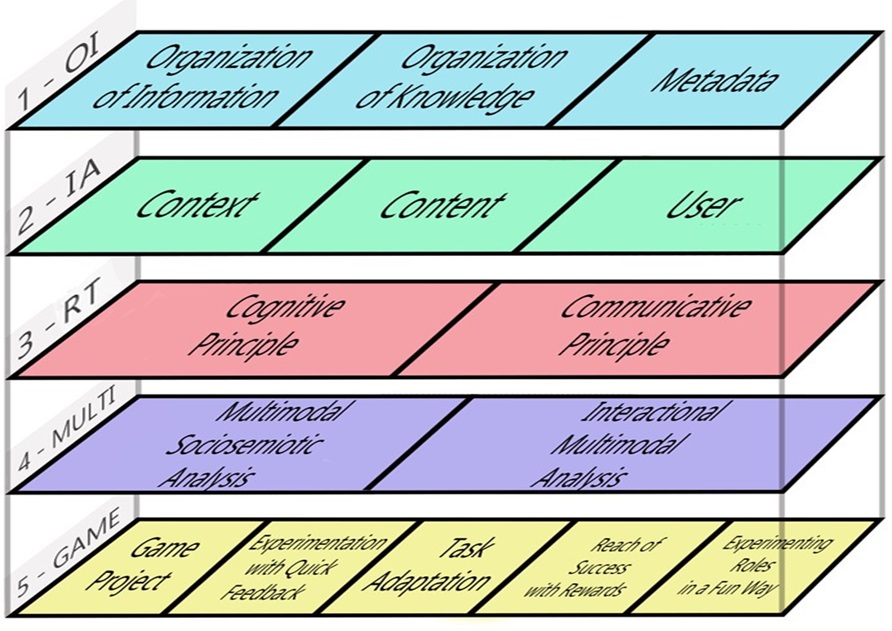

This section considers the 2655 and 4676 publications (6461 and 12160 author keywords, respectively) related to crowdsourcing in two periods: 2008-2017 and 2018-2022.
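The keyword maps discussed in this section rest on two simple quantities: the number of publications in which a keyword occurs, and the number of publications in which two keywords occur together, restricted to keywords above a minimum occurrence threshold. The following Python sketch shows one way to compute them. It is a sketch under assumptions: the `SYNONYMS` map, which merges spelling variants, is a hypothetical preprocessing choice (the figures in this paper keep variants such as "crowd-sourcing" as separate keywords), and the sample papers are invented.

```python
from collections import Counter
from itertools import combinations

# Hypothetical synonym map; merging variants is optional preprocessing.
SYNONYMS = {
    "crowd-sourcing": "crowdsourcing",
    "crowd sourcing": "crowdsourcing",
    "mechanical turk": "amazon mechanical turk",
    "mturk": "amazon mechanical turk",
}


def normalise(keyword):
    """Lower-case a keyword and map known variants to one label."""
    key = keyword.strip().lower()
    return SYNONYMS.get(key, key)


def keyword_network(papers, min_occurrences):
    """Return (occurrences, links) for author keywords.

    occurrences counts the papers in which each keyword appears; links counts
    the papers in which two keywords appear together, considering only
    keywords with at least min_occurrences occurrences.
    """
    keyword_sets = [{normalise(k) for k in kws} for kws in papers]
    occurrences = Counter(k for kws in keyword_sets for k in kws)
    kept = {k for k, n in occurrences.items() if n >= min_occurrences}
    links = Counter()
    for kws in keyword_sets:
        for a, b in combinations(sorted(kws & kept), 2):
            links[(a, b)] += 1
    return occurrences, links


# Invented author-keyword lists for four hypothetical papers.
papers = [
    ["Crowdsourcing", "MTurk", "performance"],
    ["crowd sourcing", "Mechanical Turk"],
    ["crowdsourcing", "citizen science"],
    ["Crowdsourcing", "Citizen Science", "social media"],
]

occ, links = keyword_network(papers, min_occurrences=2)
```

With the threshold set to two, "performance" and "social media" are dropped from the network, while the merged "amazon mechanical turk" label reaches the threshold only because its variants are pooled. This is the same effect noted below for the first period, where that keyword would rise in the ranking if its variants were added together.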

Figure 4.1 illustrates the first period (2008-2017). The most common keyword, apart from "crowdsourcing", is "citizen science". "Citizen science", along with "crowd-sourcing" and "crowd sourcing" (the fourth and seventh most important keywords), belongs to the green cluster, the second most numerous with 14 items. The third main keyword is "social media", which leads the seventh largest group, in light blue and with 7 elements. This group includes "volunteered geographic information", the ninth author keyword. In this period, the largest cluster, with 20 items, is the red group, which includes "crowdsourcing" as well as "open innovation" and "innovation" (the fifth and eighth most important keywords). The third largest cluster, in dark blue, has 14 keywords, with "big data" and "mobile crowdsourcing" (the sixth and fourteenth keywords) as its main representatives. The fourth group, in yellow and with 10 elements, is headed by "human computation" and does not include any of the top 10 keywords. The fifth cluster (10 items), in pink, is led by "amazon mechanical turk". Although this keyword ranks tenth, it would rise to fourth position if "mechanical turk" and "mturk" were added to it. The sixth group, in medium blue and with 7 elements, is led by "performance" and "human factor". Finally, an eighth residual group, in brown, is led by "participatory sensing", which is outside our ranking.

Figure 4.1.

Co-occurrence network of author keywords of Crowdsourcing-related publications. The figure applies a threshold of nine occurrences and shows the 86 keywords before 2018 with the most frequent co-occurrences, out of the 6461 keywords

Figure 4.2 shows the last period (2018-2022). The main keyword remains "crowdsourcing", followed now by "task analysis", "citizen science" and "social media". The main group, in red and with 20 items, is headed by "task analysis", the new second main keyword, and includes "spatial crowdsourcing", "mobile crowdsourcing", "privacy", "task assignment", "sensors" and "incentive mechanism", the tenth, eleventh, twelfth, sixteenth, nineteenth and twentieth most important keywords. The second network, in green and with 17 items, is led by "crowdsourcing", "citizen science" and "social media", and also includes "covid 19" (the sixth top keyword), the group of "amazon mechanical turk" (including "mturk" and "mechanical turk"), "volunteered geographic information" and "crowd sourcing". The network led by "machine learning" (the new fifth top keyword) is in third place, in dark blue and with 16 items; it also contains "deep learning" (the eighth most relevant keyword), "data model", "classification", "artificial intelligence" and "natural language processing" among its most important keywords. "Blockchain", the seventh most relevant keyword, leads the fourth cluster, in yellow and with 13 items, which also includes "crowd-sourcing", "internet of things", the group related to "smart city-smart cities", and "big data" among its main keywords. Finally, "open innovation", the ninth top keyword, leads the fifth group, in dark pink and with 7 items, with keywords such as "innovation", "collaboration", "gamification", "crowdfunding" and "smartphone".

Figure 4.2.

Co-occurrence network of author keywords of C.-related publications (2018-2022). The figure applies a threshold of twenty occurrences and shows the 73 keywords with the most frequent co-occurrences, out of the 12160 keywords

The results show the change in the ranking of keywords, and thus the new trends in research. In Table 4 (which illustrates the top author keywords), although the three main initial keywords remain important, the remaining ones undergo a profound transformation. Firstly, some relevant keywords such as "task analysis", "covid 19", "blockchain", "deep learning" or "spatial crowdsourcing" are not only new in the classification, but also sit among the top ten keywords in the second period. Others, such as "open innovation", "big data", "innovation" or "volunteered geographic information", lose weight, most of them falling far from the top of the ranking. "Machine learning", the group of "amazon mechanical turk", "mobile computing" and "collaboration" rise significantly in the ranking. This trend is accompanied by the disappearance from the ranking of several keywords: "human computation", "web 2.0", "human factor" and "social networks" on the one hand, and "performance", "internet", "cocreation", "design", "data collection" and "participation" on the other.

Table 4.

The top author keywords co-occurrence of crowdsourcing related publications

Source: Own elaboration based on WoS 2023. R: rank; Oc: author keyword occurrences; Co: author keyword co-occurrence links. In the keyword columns: in yellow, the keywords that enter the ranking (absent in the first period but present in the second); in green, those that rise in the second period; in orange, those that reduce their presence; and in red, those that drop out of the ranking in the second period. In the occurrences column, the colours indicate the various clusters.

The second set of keywords mentioned above disappears because the Crowdsourcing literature has moved from a definition phase to one where practical and methodological issues related to its application gain importance. This explains the entry in the list of "task analysis" (now in second position), "privacy", "task assignment", "incentive mechanisms" or "data model", as well as the relevance of "covid 19" in this period.

An in-depth analysis also points out the relevance of managing the implementation and development of crowdsourcing processes within organizations. It also reveals management trends linked to the phases of strategic analysis ("task analysis", "open innovation", "innovation", "classification"), implementation and management of human resources ("task assignment", "collaboration"), or financial analysis ("optimization"); as well as the incorporation of perspectives coming from Planning and Economy, Geography ("smart city-smart cities", "volunteered geographic information"), Psychology and Marketing ("incentive mechanisms", "gamification"), Law or Ethics ("privacy") and even Finance ("crowdfunding").

The results also stress the paradigm shift in the management literature related to information systems, towards "Web 3.0", where digital technologies support human cooperation and manipulate web services (Garrigos et al., 2012). This movement develops with the evolution of the ubiquitous and pervasive web, where business intelligence will use data automatically, linked to mobile applications and the development of new technologies. This change is reflected in the rise of "mobile crowdsourcing" and "machine learning"; the new appearance of "blockchain", "deep learning" and "spatial crowdsourcing" (all in the top ten of the second ranking), or "artificial intelligence" and "natural language processing". It is also observed with "internet of things" and "smartphone-smartphones".

Authors Co-citation network and bibliographic coupling of authors

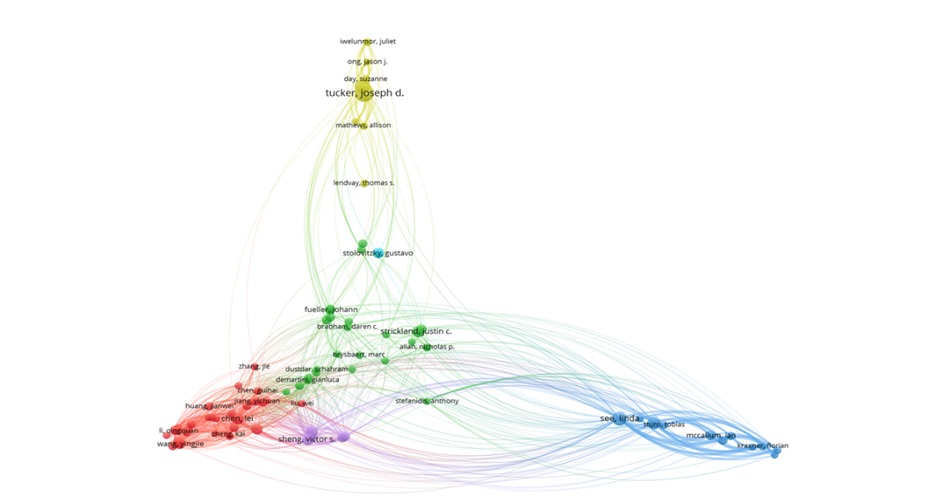

Figure 5 shows 4 clusters in the co-citation analysis of authors. The main group, in red and with 43 items, is led by Tong, the eighth most cited author (311 citations), and by Zhang and Raykar, eleventh and thirteenth (with 266 and 261 citations, respectively). It has a strong focus on computer science, with particular aspects such as spatial data science, data science or artificial intelligence. The second cluster, in green and with 41 items, is led by three of the four most cited authors: Howe (993 citations), Brabham (595 citations) and Estelles-Arolas (421 citations), with Boudreau also standing out as the sixth most cited (355 citations). These researchers specialise in management. The third cluster, in blue and with 19 items, is led by Von Ahn (361), Kittur (285) and Paolacci (267), all three among the 10 most cited, and Buhrmester (266), the eleventh most cited. These authors investigate behavioural and psychological aspects, focusing on human computation, human-computer interaction or the specific use of Amazon Mechanical Turk. Finally, the yellow cluster, with 6 items, is led by Goodchild, the third most cited author (477 citations), and by Haklay, seventh with 335 citations. These researchers focus on geographic information, citizen science or environmental information.

Figure 5.

Author co-citation network on C.: the 109 authors, of the 125407 cited authors, that meet the threshold of a minimum of 100 citations of a cited author

The results of the bibliographic coupling of authors are shown in Figure 6. The ranking is led by See (14745 link strength, 29 documents), Fritz (12808 l.s., 23 doc.), McCallum (8663 l.s., 14 doc.), Sheng (8659 l.s., 19 doc.) and Tucker (8337 l.s., 47 doc.). There are 6 main clusters. The most important one, in red and with 31 items, includes Chen, Li G., Wang and Tong, 10th, 17th, 18th and 20th in the ranking, respectively. These authors specialise in computer science, with aspects such as spatial crowdsourcing, databases and mobile systems. The second cluster, in green and with 25 items, includes Strickland, Brabham and Leimeister; its main areas combine behavioural pharmacology and psychological aspects with others related mainly to management. The third one, in light blue and with 11 items, includes the first three authors of the ranking, See, Fritz and McCallum, but also Perger, Schepaschenko and Kraxner (seventh, eighth and ninth), and Obersteiner, Schill and Sturn, all among the top 16 of the ranking. The main research areas of these authors relate to environmental and geographic information, from the perspective of applied systems analysis, with topics such as land cover, land use or ecology. The fourth cluster, in yellow and with 8 items, includes Tucker and Tang, fifth and twelfth in our ranking, and reflects a perspective focused on health and medical research. The fifth cluster, in violet and with 5 items, is led by Sheng, fourth in our list, and Zhang, Li, Jiang and Wu, ranked 6th, 11th, 14th and 19th. Its main research areas are machine learning, data mining and deep learning, closely related to the first cluster, as seen in Figure 6, but with specific inferences within the same area of computing and engineering. The sixth cluster, in blue and with two items, in the centre of the figure, has Stolovitzky as its main representative, outside the top 20 authors. These authors focus on clinical and biomedical research.

Figure 6.

Bibliographic coupling of authors: the 82 authors, of the 19910 authors, that meet the threshold of a minimum of 8 documents per author
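Bibliographic coupling links two authors when their documents cite the same references. The Python sketch below illustrates the idea under simplifying assumptions that are not taken from the original analysis: the coupling strength between two authors is the number of shared references summed over every pair of distinct documents (one by each author), and authors below a minimum number of documents are excluded, mirroring the threshold mentioned in the caption of Figure 6. The author labels and reference identifiers are invented.

```python
from collections import defaultdict
from itertools import combinations


def author_coupling(documents, min_documents=1):
    """Return {(author_a, author_b): number of shared references}.

    documents is a list of dicts with "authors" and "references" keys.
    A document co-authored by both authors is never coupled with itself.
    """
    ref_sets = [set(doc["references"]) for doc in documents]
    docs_by_author = defaultdict(list)
    for index, doc in enumerate(documents):
        for author in doc["authors"]:
            docs_by_author[author].append(index)

    # Keep only authors who reach the document threshold.
    authors = sorted(
        a for a, idxs in docs_by_author.items() if len(idxs) >= min_documents
    )

    coupling = {}
    for a, b in combinations(authors, 2):
        shared = sum(
            len(ref_sets[i] & ref_sets[j])
            for i in docs_by_author[a]
            for j in docs_by_author[b]
            if i != j
        )
        if shared:
            coupling[(a, b)] = shared
    return coupling


# Invented toy corpus: four documents by three hypothetical authors.
documents = [
    {"authors": ["author_a", "author_b"], "references": ["r1", "r2", "r3"]},
    {"authors": ["author_a"], "references": ["r2", "r3", "r4"]},
    {"authors": ["author_c"], "references": ["r3", "r5"]},
    {"authors": ["author_b"], "references": ["r1", "r4"]},
]

all_links = author_coupling(documents)
frequent_links = author_coupling(documents, min_documents=2)
```

Raising `min_documents` removes occasional authors from the map, which is why only 82 of the 19910 authors appear in the figure. Authors who draw on the same body of references end up strongly linked even if they never cite one another.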

Discussion and conclusion

This paper has analyzed the relevance of Crowdsourcing and its fundamental characteristics. The work has also observed its main advantages, and confirmed the important growth and diverse evolution of the concept. Moreover, the paper has reviewed the crowdsourcing literature through bibliometric methodology to analyse the field. The results helped to: analyze, structure and visualize the knowledge about crowdsourcing; study its state of the art and evolution; glimpse the perspectives from which this field is observed; observe practical applications and uses and offer possible developments; and improve implementations or avoid problems associated with them.

The work provides various general results:

Crowdsourcing has been a complex phenomenon since its origins (Cricelli et al., 2022). Debates, discourses and critiques of the term “Crowdsourcing” have been constant (Brabham, 2008; Garrigos et al., 2017), and they continue with authors who point to various shortcomings (Kietzmann, 2017; Cappa et al., 2019; Schenk et al., 2019). After a review of the literature, Crowdsourcing is defined in this work as "the action of taking a specific task or job, whether or not previously performed by an employee of an organization or by a designated agent (such as a contractor, an external worker or a supplier), and subcontracting it, through an “open call” to a large group of people (inside or outside the organization), a community or the general public via the Internet, for compensation that does not have to be financial". Our work underlines the complexity of the process, indicates its principal characteristics, and shows the interdisciplinary nature of the research in Crowdsourcing.

Crowdsourcing is now regarded as a powerful tool for organizations, and a popular research topic (Karachiwalla & Pinkow, 2021; Lenart-Gansiniec et al., 2022). Crowdsourcing is important, both theoretically and practically, because it implies a paradigm shift in the conception and evolution of organizations and e-commerce in general, and also a change in the way organizations carry out their various tasks and functions. Crowdsourcing has multiple advantages for improving competitiveness and efficiency: cost reductions, quality improvements, higher speed of creation and development of processes, innovations, improvements in power over other agents, the development of distinctive competencies, or even improvements associated with changes in organizational behavior and various effects on participant motivation (Lebraty & Lebraty, 2013; Blohm et al., 2018; Wang et al., 2019).

Research on Crowdsourcing is recent, and still limited. However, the trends of globalization and internationalization, and technological advances, have created an environment that favors its growth. Our results corroborate its importance, the explosive growth in recent years, the impact of the published works in the field, the large number of citations, and the relevance and quality of the authors dedicated to the subject. The important implications of these facts create the qualitative basis that underlines its tremendous potential. Moreover, our results have important theoretical and practical implications, which we develop next.

Theoretical implications

The incipience and explosive growth of crowdsourcing literature, and its important implications, stress the high potential for future research. Nevertheless, further research has to consider the state of the art and development of the literature, the most important fields and themes, and their potentialities.

Our results indicate that the literature on crowdsourcing is changing and evolving: 1) from general conceptual and theoretical works towards specific analyses of new and concrete uses, and of its implementation and management inside organizations; 2) from initial theoretical research towards more applied and empirical works. The empirical research has started mainly with "case studies". However, there is still a lack of methodological or empirical works. The work shows the difficulty of developing these investigations due to the lack of data, or its still limited availability, to study crowdsourcing processes from a more statistical point of view. It also shows the necessity for new indicators to measure and observe the benefits and problems of the area and its impact on organizations.

The results reveal the main fields observing crowdsourcing, their evolution, and the connections between the various areas of study and application of crowdsourcing, indicating new and growing conceptions of analysis that can open up new areas of research. The classical areas of research on the topic are Management, Computer Science, and secondly Geography and Psychology, with different degrees of maturity. Other areas are in an incipient but promising stage:

The managerial perspective (especially related to Information Systems) is highlighted by almost all the analyses conducted. Nevertheless, the impact of the works published in the area of management (citation, co-citation) is greater than the number of works developed. We also detect a lag in the evolution of the management literature compared to the global crowdsourcing literature, both in the number of articles and in the topics observed. The results stress developments within the management literature in areas linked to Open Innovation and social media. The trend also confirms the development of models in organizations (Täuscher, 2017), and a progressive emphasis on strategic analysis, especially on the processes of implementing Crowdsourcing within organizations (as opposed to previous conceptual research). This implementation is characterised by a focus on more efficient crowdsourcing processes (as against earlier statements of generic ideas and general advantages) and an emphasis on human capital management in the process of organizational implementation. This is evidenced by the growing relevance of keywords related to human resource management, financial analysis, and internal management procedures. The results also show the growth of new technological perspectives associated with the management of the ubiquitous web, collaboration and multimedia management; or the expansion of areas close to Management (Economics, Geography, Psychology, Marketing, Law, Ethics or Finance). The results also suggest the need for future development of applications of crowdsourcing in multiple value chain tasks (Garrigos et al., 2017), a greater focus on "incentive mechanisms" (not only extrinsic (Lebraty & Lebraty, 2013)), motivation and ethical processes in crowd management (Blohm et al., 2018; Fieseler et al., 2019), and the inclusion of perspectives from various disciplines (Wang et al., 2019) for managing crowdsourcing processes.

The work stresses the relevance of technological approaches. This is observed in the co-cited references and keyword analyses, with "Computer Science Information Systems" as the area with the most work published and "Lecture Notes in Computer Science" as the most co-cited journal. The results reflect the impact of the evolution of information system processes on the crowdsourcing literature: a change from traditional perspectives (related to "data collection", "human factors" and "human computation") towards the framework of the ubiquitous web (linked to "machine learning", "mobile crowdsourcing" and "smart phones", with new perspectives entering strongly, such as "blockchain", "deep learning", "internet of things" and "artificial intelligence"), which is transforming the management and electronic commerce field. The results highlight the growing participation of "non-human" actors (Kietzmann, 2017) in crowdsourcing mechanisms, and a change towards more technological innovations in the management of organizations. They also show the increase of specific technological studies, and the reduction of generic analyses of technology and data mining as a whole. Moreover, research on mobile devices is gaining importance (mobile computing-related journals in the journal co-citation analysis), along with aspects related to location, sensor networks and information processing (observed especially in Chinese authors or some relevant journals). The results also indicate developments in areas related to image and multimedia (multimedia informatics and communications, image collection, video technology and multimedia).

Other important areas are Geography and Psychology. The geographical perspective is corroborated by the main keywords observed ("citizen science" (mainly from a geographical perspective), "spatial crowdsourcing" or "volunteered geographic information"); the high citation of Dickinson et al. (2010) (focused on geographic information); the high co-citation of Goodchild (2007), which leads a cluster of important works; and the relevance of "ISPRS International Journal of Geo Information".

The psychological and behavioral science view has mainly focused on the applied study of Crowdsourcing through Amazon's "Mechanical Turk" (relevant keywords). This perspective is also stressed by the high citation of Mason and Suri (2012) and the relevance of "Behavior Research Methods" and "Computers in Human Behavior" (leading in citations per article published). The results show the potential of geographic and environmental targeting, including issues related to transport or tourism (possible new emerging development areas), the relevance of Behavioral Science, and the emergence of issues in Cognitive Psychology, Social Informatics and Reputation Analysis, which are incipient and possible sources of development for Crowdsourcing.

Crowdsourcing is also analyzed in a wide variety of disciplines with diverse approaches (Ghezzi et al., 2018). Citation and co-citation analyses indicate the relevance of Crowdsourcing in Biology (Yanez-Mó et al., 2015), Nanotechnology (Vance et al., 2015), Medicine, or human sciences; and even in virgin areas such as Languages, Communication, Engineering, Mathematics, Physics or Chemistry (as observed in the journal analyses: number of publications in "IEEE" journals, "Plos One" or "Journal of Medical Internet Research"; or co-citation of "Plos One", "Science" and "Nature").

To sum up, the dispersion of works across very diverse scientific areas, the development of the management and technology literatures, and the greater relevance of various keywords indicate an important potential for development of the field. Moreover, the existence of "trendy" topics in the literature may steer the development of future research (authors tend to publish in areas where there are more citations, while publishers will favour the publication of works with more potential for citations).

Practical implications

Different professionals face the possible development of various policies and processes in their organizations. The diverse perspectives observed help to better understand the area for further innovations. Crowdsourcing can be applied to any industry, sector or field of business (Blohm et al., 2018). It offers multiple possibilities of use, both for the creation of new business models (Brabham, 2008; Peng & Zhang, 2010) and for the improvement of their management (see Täuscher, 2017). Crowdsourcing can be used through different schemes or typologies (Kleemann et al., 2008; Brabham, 2013), such as crowdvoting, creative crowdsourcing, microworking, crowdsource workforce management (Garrigos et al., 2017) or crowdfunding (Brown et al., 2017; Paschen, 2017). It has various possibilities and different uses in multiple processes of the value chain (Schenk & Guittard, 2011; Garrigos et al., 2012, 2014, 2015, 2017; Zahai et al., 2018), including tasks not previously considered by companies (Kietzmann, 2017), essentially relating to "open innovation" processes. One must consider the development of the crowdsourcing process, with problems related to "task analysis", the selection of the contributors in the crowd ("task assignment"), and "collaboration". The results note that the selection process, "privacy" or the poor design of crowdsourcing processes may limit its potential.

Other implementation issues, especially ethical ones, are associated with the remuneration of the crowd (Bañón-Gomis et al., 2015), or risks related to the "dark face" of crowdsourcing (Wilson et al., 2017). Managers should consider the sustainability of the actions developed (Garrigos et al., 2018), policies creating social value, or various benefits associated with social entrepreneurship (Narangajavana et al., 2016), with ethically conscious actions from social, environmental, cultural and economic perspectives. Furthermore, new conceptions of Crowdsourcing should look at non-human participation in the process (Kietzmann, 2017), through new technologies associated with the development of Web 3.0 (Garrigos et al., 2012), or specifically the ubiquitous and pervasive web, as observed in the theoretical implications. Practitioners should also consider the progress, effects and impact that changes in information technology can have on the development of these processes (Boudreau & Lakhani, 2013) (for example, the impact of social media and mobile platforms observed), or the availability of new management and planning techniques, technological solutions or different open Crowdsourcing or innovation solutions, explained by Certoma et al. (2015). Future Crowdsourcing must be conceived as a set of dynamic and multilevel processes, which take in diverse perspectives and continuous advances in the area (coming essentially from technology and management, but also from psychology and behavioural sciences), through a continuous redefinition of the challenges of the process and its potential to provide adequate responses to economic, social, scientific, environmental, technological, and even psychological and ethical situations (especially those related to motivators (Geiger et al., 2011) and the fair relationship with participants (Fieseler et al., 2019)).

Limitations and future research

The limited scope of this document reveals limitations that may open further analyses. The incipient importance, expansion and growth of Crowdsourcing, and especially the still scarce literature in some important scientific fields (with relevant potential, or of great popularity), show deficiencies in research. Further analyses could focus on some of the trends identified in this work, or could analyze the progress in the field by studying it from various and more specific scientific points of view (Management, Computer Science, Geography, etc.). Focusing on management and electronic commerce, the development and peculiarities of Crowdsourcing in some related fields (Marketing, Finance…), or the management and use of Social Media and intelligent technologies, should be studied more deeply. Inside the Management field, interesting research should consider Knowledge Management and Intellectual Capital, Open Innovation, the use of new systems and technological processes associated with the ubiquitous web, Entrepreneurship and New Business Models, Ethics or Social Responsibility, among others. Furthermore, our results highlight the progressive maturity of the literature on Crowdsourcing and the tendency to produce more empirical articles, as well as the need for the development of measurement indicators.

The bibliometric methodology also has limitations if not complemented by a more qualitative analysis, as we tried to observe in this document with a detailed analysis of the data. Moreover, we used the WoS Core Collection database as a source of data, considering only articles, reviews, letters and notes. Other works could analyze other types of secondary documents included in this database, use other databases (Scopus, Google Scholar), other sources and datasets (theses or doctoral papers), or databases using other languages. In addition, other tools or software can provide analyses that complement this work. Finally, the study could be completed by refining the analysis with a more in-depth and detailed study of some of the groups and issues identified.

References

Afuah, A., & Tucci, C. L. (2012). Crowdsourcing as a solution to distant search. Academy of Management Review, 37(3), 355-375. http://dx.doi.org/10.5465/amr.2010.0146

Ågerfalk, P. J., & Fitzgerald, B. (2008). Outsourcing to an unknown workforce: Exploring opensourcing as a global sourcing strategy. MIS Quarterly, 32, 385-409. https://doi.org/10.2307/25148845

Albors, J., Ramos, J. C., & Hervas, J. L. (2008). New learning network paradigms: Communities of objectives, crowdsourcing, wikis and open source. International Journal of Information Management, 28(3), 194-202. http://dx.doi.org/10.1016/j.ijinfomgt.2007.09.006

Ali, I., Balta, M., & Papadopoulos, T. (2023). Social media platforms and social enterprise: Bibliometric analysis and systematic review. International Journal of Information Management, 69, 102510. http://dx.doi.org/10.1016/j.ijinfomgt.2022.102510

Bañón-Gomis, A. J., Martínez-Cañas, R., & Ruiz-Palomino, P. (2015). Humanizing Internal Crowdsourcing Best Practices. In F. Garrigos, I. Gil, & S. Estelles (Eds.), Advances in Crowdsourcing (pp. 105-117). Cham: Springer. http://dx.doi.org/10.1007/978-3-319-18341-1_9

Bayus, B. L. (2013). Crowdsourcing new product ideas over time: An analysis of the Dell IdeaStorm community. Management Science, 59(1), 226-244. http://dx.doi.org/10.1287/mnsc.1120.1599

Blanco-Mesa, F., Merigó, J. M., & Gil-Lafuente, A. M. (2017). Fuzzy decision making: A bibliometric-based review. Journal of Intelligent & Fuzzy Systems, 32(3), 2033-2050. http://dx.doi.org/10.3233/JIFS-161640

Blohm, I., Zogaj, S., Bretschneider, U., & Leimeister, J. M. (2018). How to manage crowdsourcing platforms effectively? California Management Review, 60(2), 122-149. http://dx.doi.org/10.1177/0008125617738255

Boudreau, K. J., & Lakhani, K. R. (2013). Using the crowd as an innovation partner. Harvard Business Review, 91(4), 60-69.

Brabham, D. C. (2008). Crowdsourcing as a model for problem solving: An introduction and cases. Convergence: International Journal of Research into New Media Technologies, 14(1), 75-90. https://doi.org/10.1177/1354856507084420

Brabham, D. C. (2013). Crowdsourcing. Cambridge, MA: MIT Press.

Broadus, R. N. (1987). Toward a definition of “bibliometrics”. Scientometrics, 12(5-6), 373-379. https://doi.org/10.1007/BF02016680

Brown, T. E., Boon, E., & Pitt, L. F. (2017). Seeking funding in order to sell: Crowdfunding as a marketing tool. Business Horizons, 60(2), 189-195. https://doi.org/10.1016/j.bushor.2016.11.004

Brysbaert, M., Warriner, A. B., & Kuperman, V. (2014). Concreteness ratings for 40 thousand generally known English word lemmas. Behavior Research Methods, 46, 904-911. https://doi.org/10.3758/s13428-013-0403-5

Buhrmester, M., Kwang, T., & Gosling, S. D. (2011). Amazon's Mechanical Turk: A new source of inexpensive, yet high-quality, data? Perspectives on Psychological Science, 6(1), 3-5. https://doi.org/10.1177/1745691610393980

Cancino, C., Merigó, J. M., Coronado, F., Dessouky, Y., & Dessouky, M. (2017). Forty years of Computers & Industrial Engineering: A bibliometric analysis. Computers & Industrial Engineering, 113, 614-629. http://dx.doi.org/10.1016/j.cie.2017.08.033

Cappa, F., Oriani, R., Pinelli, M., & De Massis, A. (2019). When does crowdsourcing benefit firm stock market performance? Research Policy, 48(9), 103825. https://doi.org/10.1016/j.respol.2019.103825

Casler, K., Bickel, L., & Hackett, E. (2013). Separate but equal? A comparison of participants and data gathered via Amazon’s MTurk, social media, and face-to-face behavioral testing. Computers in Human Behavior, 29(6), 2156-2160. https://doi.org/10.1016/j.chb.2013.05.009

Certoma, C., Corsini, F., & Rizzi, F. (2015). Crowdsourcing urban sustainability. Data, people and technologies in participatory governance. Futures, 74, 93-106. http://dx.doi.org/10.1016/j.futures.2014.11.006

Chandler, J., & Shapiro, D. (2016). Conducting clinical research using crowdsourced convenience samples. Annual Review of Clinical Psychology, 12, 53-81. https://doi.org/10.1146/annurev-clinpsy-021815-093623

Cricelli, L., Grimaldi, M., & Vermicelli, S. (2022). Crowdsourcing and open innovation: a systematic literature review, an integrated framework and a research agenda. Review of Managerial Science, 16(5), 1269-1310. https://doi.org/10.1007/s11846-021-00482-9

Crump, M. J., McDonnell, J. V., & Gureckis, T. M. (2013). Evaluating Amazon's Mechanical Turk as a tool for experimental behavioral research. Plos One, 8(3), e57410. https://doi.org/10.1371/journal.pone.0057410

Delgado López-Cózar, E., Robinson-García, N., & Torres-Salinas, D. (2014). The Google Scholar experiment: How to index false papers and manipulate bibliometric indicators. Journal of the Association for Information Science and Technology, 65(3), 446-454. https://doi.org/10.1002/asi.23056

Dickinson, J. L., Zuckerberg, B., & Bonter, D. N. (2010). Citizen science as an ecological research tool: challenges and benefits. Annual Review of Ecology, Evolution, and Systematics, 41(1), 149-172. http://doi.org/10.1146/annurev-ecolsys-102209-144636

Diem, A., & Wolter, S. C. (2013). The use of bibliometrics to measure research performance in education sciences. Research in Higher Education, 54(1), 86-114. http://dx.doi.org/10.1007/s11162-012-9264-5

Doan, A., Ramakrishnan, R., & Halevy, A. Y. (2011). Crowdsourcing systems on the world-wide web. Communications of the ACM, 54(4), 86-96. https://doi.org/10.1145/1924421.1924442

Estelles-Arolas, E., & González-Ladrón-De-Guevara, F. (2012). Towards an integrated crowdsourcing definition. Journal of Information Science, 38(2), 189-200. http://dx.doi.org/10.1177/0165551512437638

Fieseler, C., Bucher, E., & Hoffmann, C. P. (2019). Unfairness by design? The perceived fairness of digital labor on crowdworking platforms. Journal of Business Ethics, 156(4), 987-1005. https://doi.org/10.1007/s10551-017-3607-2

Garrigos-Simon, F. J., Galdon, J. L., & Sanz-Blas, S. (2017). Effects of crowdvoting on hotels: the Booking.com case. International Journal of Contemporary Hospitality Management, 29(1), 419-437. http://dx.doi.org/10.1108/IJCHM-08-2015-0435

Garrigos-Simon, F. J., Lapiedra Alcami, R., & Barbera Ribera, T. (2012). Social networks and Web 3.0: their impact on the management and marketing of organizations. Management Decision, 50(10), 1880-1890. http://dx.doi.org/10.1108/00251741211279657

Garrigos-Simon, F. J., Narangajavana, Y., & Galdón-Salvador, J. L. (2014). Crowdsourcing as a competitive advantage for new business models. In I. Gil, D. Palacios, M. Peris, E. Vendrell, & C. Ramirez (Eds.), Strategies in E-business (pp. 29-37). Boston, MA: Springer. http://doi.org/10.1007/978-1-4614-8184-3_3

Garrigos-Simon, F. J., & Narangajavana, Y. (2015). From Crowdsourcing to the Use of Masscapital. The Common Perspective of the Success of Apple, Facebook, Google, Lego, TripAdvisor, and Zara. In F. Garrigos, I. Gil, & S. Estelles (Eds.), Advances in Crowdsourcing (pp. 1-13). Cham: Springer. http://doi.org/10.1007/978-3-319-18341-1_1

Garrigos-Simon, F. J., Narangajavana-Kaosiri, Y., & Lengua-Lengua, I. (2018). Tourism and Sustainability: A Bibliometric and Visualization Analysis. Sustainability, 10(6), 1-23. http://dx.doi.org/10.3390/su10061976

Geiger, D., Seedorf, S., Schulze, T., Nickerson, R. C., & Schader, M. (2011). Managing the Crowd: Towards a Taxonomy of Crowdsourcing Processes. In Americas Conference on Information Systems, August 4th-7th (pp. 1-11). Detroit, Michigan.

Ghezzi, A., Gabelloni, D., Martini, A., & Natalicchio, A. (2018). Crowdsourcing: a review and suggestions for future research. International Journal of Management Reviews, 20(2), 343-363. http://dx.doi.org/10.1111/ijmr.12135

Goodchild, M. F. (2007). Citizens as sensors: the world of volunteered geography. GeoJournal, 69(4), 211-221. https://doi.org/10.1007/s10708-007-9111-y

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. Proceedings of the National Academy of Sciences of the United States of America, 102(46), 16569-16572. https://doi.org/10.1073/pnas.0507655102

Howe, J. (2006). The rise of crowdsourcing. Wired Magazine, 14(6), 1-4. http://www.wired.com/wired/archive/14.06/crowds.html

Howe, J. (2008). Crowdsourcing: How the power of the crowd is driving the future of business. Random House.

Jeppesen, L. B., & Lakhani, K. R. (2010). Marginality and problem-solving effectiveness in broadcast search. Organization Science, 21(5), 1016-1033. http://dx.doi.org/10.1287/orsc.1090.0491

Jiang, Y., Guo, B., Zhang, X., Tian, H., Wang, Y., & Cheng, M. (2023). A bibliometric and scientometric review of research on crowdsourcing in smart cities. IET Smart Cities, 5(1), 1-18. https://doi.org/10.1049/smc2.12048

Karachiwalla, R., & Pinkow, F. (2021). Understanding crowdsourcing projects: A review on the key design elements of a crowdsourcing initiative. Creativity and Innovation Management, 30(3), 563-584. https://doi.org/10.1111/caim.12454

Kessler, M. M. (1963). Bibliographic coupling between scientific papers. The Journal of the Association for Information Science and Technology, 14(1), 10-25. https://doi.org/10.1002/asi.5090140103

Kietzmann, J. H. (2017). Crowdsourcing: A revised definition and an introduction to new research. Business Horizons, 60(2), 151-153. http://dx.doi.org/10.1016/j.bushor.2016.10.001

Kleemann, F., Voß, G. G., & Rieder, K. M. (2008). Un(der)paid innovators: The commercial utilization of consumer work through crowdsourcing. Science, Technology & Innovation Studies, 4(1), 5-26. http://dx.doi.org/10.17877/DE290R-12790

Krishna, R., Zhu, Y., Groth, O., Johnson, J., Hata, K., Kravitz, J., Chen, S., Kalantidis, Y., Li, L., Shamma, D. A., Bernstein, M. S., & Fei-Fei, L. (2017). Visual genome: Connecting language and vision using crowdsourced dense image annotations. International Journal of Computer Vision, 123, 32-73. https://doi.org/10.1007/s11263-016-0981-7

Lebraty, J. F., & Lobre-Lebraty, K. (2013). Crowdsourcing: One step beyond. John Wiley & Sons. https://doi.org/10.1002/9781118760765

Lehmann, J., Isele, R., Jakob, M., Jentzsch, A., Kontokostas, D., Mendes, P. N., ... & Bizer, C. (2015). DBpedia – a large-scale, multilingual knowledge base extracted from Wikipedia. Semantic Web, 6(2), 167-195. https://doi.org/10.3233/SW-140134

Leimeister, J. M., Huber, M., Bretschneider, U., & Krcmar, H. (2009). Leveraging crowdsourcing: activation-supporting components for IT-based ideas competition. Journal of Management Information Systems, 26(1), 197-224. http://doi.org/10.2753/MIS0742-1222260108

Lenart-Gansiniec, R., Czakon, W., Sułkowski, Ł., & Pocek, J. (2022). Understanding crowdsourcing in science. Review of Managerial Science, 17(8), 1-34. http://dx.doi.org/10.1007/s11846-022-00602-z

Liao, H., Tang, M., Luo, L., Li, C., Chiclana, F., & Zeng, X. J. (2018). A bibliometric analysis and visualization of medical big data research. Sustainability, 10(1), 166. https://doi.org/10.3390/su10010166

Liao, P., Wan, Y., Tang, P., Wu, C., Hu, Y., & Zhang, S. (2019). Applying crowdsourcing techniques in urban planning: A bibliometric analysis of research and practice prospects. Cities, 94, 33-43. https://doi.org/10.1016/j.cities.2019.05.024

Litman, L., Robinson, J., & Abberbock, T. (2017). TurkPrime.com: A versatile crowdsourcing data acquisition platform for the behavioral sciences. Behavior Research Methods, 49(2), 433-442. https://doi.org/10.3758/s13428-016-0727-z

López-Meneses, E., Vázquez-Cano, E., & Román, P. (2015). Analysis and Implications of the Impact of MOOC Movement in the Scientific Community: JCR and Scopus (2010-13). Comunicar, 22(44), 73-80. https://doi.org/10.3916/C44-2015-08

Malik, B. A., Aftab, A., & Alí, N. (2019). Mapping of Crowdsourcing Research: A Bibliometric Analysis. DESIDOC Journal of Library & Information Technology, 39(1), 23-30. https://doi.org/10.14429/djlit.39.1.13630

Mason, W., & Suri, S. (2012). Conducting behavioral research on Amazon’s Mechanical Turk. Behavior Research Methods, 44(1), 1-23. https://doi.org/10.3758/s13428-011-0124-6

Merigó, J. M., Gil-Lafuente, A. M., & Yager, R. R. (2015). An overview of fuzzy research with bibliometric indicators. Applied Soft Computing, 27, 420-433. https://doi.org/10.1016/j.asoc.2014.10.035

Merigó, J. M., & Yang, J. B. (2017). Accounting research: A bibliometric analysis. Australian Accounting Review, 27(80), 71-100. https://doi.org/10.1111/auar.12109

Mohammad, S. M., & Turney, P. D. (2013). Crowdsourcing a word–emotion association lexicon. Computational Intelligence, 29(3), 436-465. https://doi.org/10.1111/j.1467-8640.2012.00460.x

Narangajavana, Y., Gonzalez-Cruz, T., Garrigos-Simon, F. J., & Cruz-Ros, S. (2016). Measuring social entrepreneurship and social value with leakage. Definition, analysis and policies for the hospitality industry. International Entrepreneurship and Management Journal, 12(3), 911-934. https://doi.org/10.1007/s11365-016-0396-5

Oran, D. P., & Topol, E. J. (2020). Prevalence of asymptomatic SARS-CoV-2 infection: a narrative review. Annals of Internal Medicine, 173(5), 362-367. https://doi.org/10.7326/m20-3012

Osareh, F. (1996). Bibliometrics, citation analysis and co-citation analysis: A review of literature I. Libri: International Journal of Libraries and Information Studies, 46(3), 221-225. http://dx.doi.org/10.1515/libr.1996.46.3.149

Paolacci, G., Chandler, J., & Ipeirotis, P. G. (2010). Running experiments on Amazon Mechanical Turk. Judgment and Decision Making, 5(5), 411-419. https://doi.org/10.1017/S1930297500002205

Paschen, J. (2017). Choose wisely: Crowdfunding through the stages of the startup life cycle. Business Horizons, 60(2), 179-188. https://doi.org/10.1016/j.bushor.2016.11.003

Peer, E., Brandimarte, L., Samat, S., & Acquisti, A. (2017). Beyond the Turk: Alternative platforms for crowdsourcing behavioral research. Journal of Experimental Social Psychology, 70, 153-163. https://doi.org/10.1016/j.jesp.2017.01.006

Peer, E., Vosgerau, J., & Acquisti, A. (2014). Reputation as a sufficient condition for data quality on Amazon Mechanical Turk. Behavior Research Methods, 46, 1023-1031. https://doi.org/10.3758/s13428-013-0434-y

Peng, L., & Zhang, M. (2010). An empirical study of social capital in participation in online crowdsourcing. International Journal on Advances in Information Sciences and Service Sciences, 5(4), 1-4. http://dx.doi.org/10.1109/ICEEE.2010.5660804

Poetz, M. K., & Schreier, M. (2012). The value of crowdsourcing: Can users really compete with professionals in generating new product ideas? Journal of Product Innovation Management, 29(2), 245-256. http://dx.doi.org/10.1111/j.1540-5885.2011.00893.x

Raykar, V. C., Yu, S., Zhao, L. H., Valadez, G. H., Florin, C., Bogoni, L., & Moy, L. (2010). Learning from crowds. Journal of Machine Learning Research, 11, 1297-1322.

Schenk, E., & Guittard, C. (2011). Towards a characterization of crowdsourcing practices. Journal of Innovation Economics & Management, 1(7), 93-107. http://dx.doi.org/10.3917/jie.007.0093

Schenk, E., Guittard, C., & Pénin, J. (2019). Open or proprietary? Choosing the right crowdsourcing platform for innovation. Technological Forecasting and Social Change, 144, 303-310. http://dx.doi.org/10.1016/j.techfore.2017.11.021

Small, H. (1973). Co‐citation in the scientific literature: A new measure of the relationship between two documents. Journal of the American Society for Information Science, 24(4), 265-269. http://dx.doi.org/10.1002/asi.4630240406

Täuscher, K. (2017). Leveraging collective intelligence: How to design and manage crowd-based business models. Business Horizons, 60(2), 237-245. https://doi.org/10.1016/j.bushor.2016.11.008

Vance, M. E., Kuiken, T., Vejerano, E. P., McGinnis, S. P., Hochella, M. F., Rejeski, D., & Hull, M. S. (2015). Nanotechnology in the real world: Redeveloping the nanomaterial consumer products inventory. Beilstein Journal of Nanotechnology, 6, 1769-1780. https://doi.org/10.3762/bjnano.6.181

Wang, L., Xia, E., Li, H., & Wang, W. (2019). A bibliometric analysis of crowdsourcing in the field of public health. International Journal of Environmental Research and Public Health, 16(20), 3825. https://doi.org/10.3390/ijerph16203825

Wang, S., Tuor, T., Salonidis, T., Leung, K. K., Makaya, C., He, T., & Chan, K. (2019). Adaptive federated learning in resource constrained edge computing systems. IEEE Journal on Selected Areas In Communications, 37(6), 1205-1221. https://doi.org/10.1109/JSAC.2019.2904348

Warriner, A. B., Kuperman, V., & Brysbaert, M. (2013). Norms of valence, arousal, and dominance for 13,915 English lemmas. Behavior Research Methods, 45, 1191-1207. https://doi.org/10.3758/s13428-012-0314-x

Wilson, M., Robson, K., & Botha, E. (2017). Crowdsourcing in a time of empowered stakeholders: Lessons from crowdsourcing campaigns. Business Horizons, 60(2), 247-253. https://doi.org/10.1016/j.bushor.2016.11.009